Cloud hosting and cloud storage are all the rage, but there are still some common pitfalls that many organizations overlook. In this blog post, I will walk through an issue that seems to be coming up a lot: exposed Amazon S3 buckets. Amazon Simple Storage Service (S3) provides the ability to store and serve static content from Amazon's cloud. Businesses use S3 to store server backups, company documents, web logs, and publicly visible content such as web site images and PDF documents. Files within S3 are organized into 'buckets', which are named logical containers accessible at a predictable URL. Access controls can be applied both to the bucket itself and to individual objects (files and directories) stored within that bucket.

A bucket is typically considered “public” if any user can list the contents of the bucket, and “private” if the bucket's contents can only be listed or written by certain S3 users. This is important to understand and emphasize: a public bucket will list all of its files and directories to any user that asks.

Checking whether a bucket is public or private is easy. All buckets have a predictable and publicly accessible URL. By default this URL will be either of the following:

- http://s3.amazonaws.com/[bucket_name]/

- http://[bucket_name].s3.amazonaws.com/

To test the openness of a bucket, a user can simply enter its URL in a web browser. A private bucket will respond with 'Access Denied'. A public bucket will list up to the first 1,000 objects it contains.
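The browser test above can also be scripted. The following is a minimal sketch, assuming only Python's standard library and the XML that S3 returns: a `ListBucketResult` document for a listable bucket, or an `Error` document whose `Code` is `AccessDenied` (private) or `NoSuchBucket` (no such bucket). The function names are hypothetical, not part of any existing tool.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET


def classify_bucket_response(status: int, body: bytes) -> str:
    """Classify an S3 bucket probe as 'public', 'private', 'missing', or 'unknown'."""
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return "unknown"
    # Listings are namespaced, e.g. {http://s3.amazonaws.com/doc/2006-03-01/}ListBucketResult
    tag = root.tag.rsplit("}", 1)[-1]
    if status == 200 and tag == "ListBucketResult":
        return "public"  # anyone can list the bucket's contents
    if tag == "Error":
        code = root.findtext("Code", default="")
        if code == "NoSuchBucket":
            return "missing"
        if code == "AccessDenied":
            return "private"
    return "unknown"  # redirects, throttling, or anything unexpected


def probe_bucket(name: str, timeout: float = 10.0) -> str:
    """Fetch the virtual-hosted-style bucket URL and classify the response."""
    url = f"https://{name}.s3.amazonaws.com/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify_bucket_response(resp.status, resp.read())
    except urllib.error.HTTPError as err:
        # 403 and 404 responses still carry an XML error body worth parsing
        return classify_bucket_response(err.code, err.read())
```

Bucket names containing dots will fail TLS certificate validation with the virtual-hosted URL over HTTPS, so the path-style URL is the safer choice for those names. Network failures raise `urllib.error.URLError` and are left to the caller.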

The security risk from a public bucket is simple. A list of files, and the files themselves if they are available for download, can reveal sensitive information. The worst-case scenario is that a bucket has been marked as 'public', exposes a list of sensitive files, and has no access controls placed on those files. Where the bucket is public but the files are locked down, sensitive information can still be exposed through the file names themselves, such as the names of customers or how frequently a particular application is backed up.

It should be emphasized that a public bucket is not a risk created by Amazon but rather a misconfiguration caused by the owner of the bucket. And although a file might be listed in a bucket, that does not necessarily mean it can be downloaded: buckets and objects have their own access control lists (ACLs). Amazon provides documentation on managing access controls for buckets, and it further helps its users by publishing a best practices document on public access considerations around S3 buckets. The default configuration of an S3 bucket is private.

The Research

My role at Rapid7 is providing penetration testing services for organizations that want to test the effectiveness of their security practices and identify potential areas of risk, as well as the likely impact of attacks in those areas. Having found public buckets on a number of assessments, and used them as part of my attack strategy, I was curious how common this issue of public buckets is, and what sorts of data we would find in exposed buckets.

Later on in the research process I discovered that someone else had already discussed the dangers of public buckets. Robin Wood previously blogged on the issue and published a tool to check the openness of buckets. Robin's work is excellent as usual, and we tried to take it a bit further, focusing on enterprises and on buckets identified in web crawling results.

The Results

We discovered 12,328 unique buckets with the following breakdown:

- Public: 1,951

- Private: 10,377

Approximately 1 in 6 of the 12,328 buckets identified (1,951, or about 16%) were left open for anyone who is interested to peruse.

These 12,328 buckets were skewed towards those we could identify based on domain name, word list, or use within web sites. From the 1,951 public buckets we gathered a list of over 126 billion files. The sheer number of files made it unrealistic to test the permissions of every single object, so a random sampling was taken instead. All told, we reviewed over 40,000 publicly visible files, many of which contained sensitive data.
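The post does not say how the random sample was drawn. With listings this large, one option is reservoir sampling (Algorithm R), which picks `k` keys uniformly from a stream of unknown length without holding the whole listing in memory. This is a substituted technique shown as a sketch, not necessarily the method used in the research; `reservoir_sample` is a hypothetical name.

```python
import random
from typing import Iterable, List, Optional


def reservoir_sample(keys: Iterable[str], k: int, seed: Optional[int] = None) -> List[str]:
    """Uniformly sample k keys from a stream of unknown length (Algorithm R)."""
    rng = random.Random(seed)
    reservoir: List[str] = []
    for i, key in enumerate(keys):
        if i < k:
            # Fill the reservoir with the first k keys
            reservoir.append(key)
        else:
            # Keep the new key with probability k / (i + 1), evicting a random slot
            j = rng.randint(0, i)
            if j < k:
                reservoir[j] = key
    return reservoir
```

Feeding it a generator over a bucket listing keeps memory proportional to `k` rather than to the number of objects, which matters when the listing runs to billions of keys.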

Some specific examples of the data found are listed below:

- Personal photos from a medium-sized social media service

- Sales records and account information for a large car dealership

- Affiliate tracking data, click-through rates, and account information for an ad company's clients

- Employee personal information and member lists across various spreadsheets

- Unprotected database backups containing site data and encrypted passwords

- Video game source code and development tools for a mobile gaming firm

- PHP source code including configuration files, which contain usernames and passwords

- Sales “battlecards” for a large software vendor

Much of the data could be used to stage a network attack, compromise user accounts, or be sold on the black market. Although more subtle, another concern was the number of publicly available log files.

The majority of the file listings were images (~60%). Although most images were harmless, a few different social media sites were exposing many of their users' pictures and videos.

Finally, there was a considerable number of text documents (over 5 million), including a surprising number that contained credentials. Much of the documentation we spot-checked was marked 'Confidential' or 'Private'.
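The post does not describe how files were categorized. A simple approach, assuming categorization by file extension alone, is to tally the share of each category across a listing. The extension mapping below is hypothetical and deliberately short; anything unmapped counts as "other".

```python
import os
from collections import Counter
from typing import Dict, Iterable

# Hypothetical, minimal extension-to-category mapping
CATEGORIES: Dict[str, str] = {
    ".jpg": "image", ".jpeg": "image", ".png": "image", ".gif": "image",
    ".txt": "text", ".csv": "text", ".log": "text", ".doc": "text", ".pdf": "text",
}


def category_share(keys: Iterable[str]) -> Dict[str, float]:
    """Return the fraction of object keys falling into each category."""
    counts: Counter = Counter()
    for key in keys:
        ext = os.path.splitext(key.lower())[1]  # '' for keys without an extension
        counts[CATEGORIES.get(ext, "other")] += 1
    total = sum(counts.values())
    return {cat: n / total for cat, n in counts.items()} if total else {}
```

Extensions are only a proxy: a `.txt` file full of credentials and a harmless README look identical here, which is why spot-checking the contents is still needed.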

Data Gathering Techniques

In the first step we gathered a list of valid bucket names. Whether a bucket name is valid can be determined by browsing to the bucket's URL; a nonexistent bucket will return a “NoSuchBucket” error. We used the following combination of sources to gather lists of valid bucket names:

- Guessing names through a few different dictionaries:

- List of Fortune 1000 company names with permutations on .com, -backup, -media. For example, walmart becomes walmart, walmart.com, walmart-backup, walmart-media.

- List of the top Alexa 100,000 sites with permutations on the TLD and www. For example, walmart.com becomes www.walmart.com, www.walmart.net, walmart.com, and walmart.

- Extracting S3 links from the HTTP responses identified by the Critical.IO project. This enabled us to identify s3.amazonaws.com and cloudfront.net addresses “in the wild”. It is very common for a cloudfront.net address to point to an S3 bucket.

- The Bing Search API was queried to gather a list of potential bucket names.
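The dictionary permutations and link extraction described in the list above can be sketched as follows. This is an illustrative reconstruction, not the custom tooling used in the research: the function names are hypothetical, the domain handling is naive (it ignores multi-part suffixes such as .co.uk), and the regular expressions only recognize the two default s3.amazonaws.com URL forms, not regional endpoints or cloudfront.net aliases.

```python
import re
from typing import Iterable, List, Set

# Suffixes from the Fortune 1000 example in the text
COMPANY_SUFFIXES = ["", ".com", "-backup", "-media"]

# Rough bucket-name shape: letters, digits, dots and hyphens, 3-63 characters
_NAME = r"[a-z0-9][a-z0-9.\-]{1,61}[a-z0-9]"
VHOST_RE = re.compile(r"//(" + _NAME + r")\.s3\.amazonaws\.com", re.IGNORECASE)
PATH_RE = re.compile(r"//s3\.amazonaws\.com/(" + _NAME + r")", re.IGNORECASE)


def company_candidates(company: str) -> List[str]:
    """walmart -> walmart, walmart.com, walmart-backup, walmart-media"""
    base = company.strip().lower().replace(" ", "")
    return [base + suffix for suffix in COMPANY_SUFFIXES]


def domain_candidates(domain: str, tlds: Iterable[str] = ("com", "net")) -> List[str]:
    """walmart.com -> www.walmart.com, www.walmart.net, walmart.com, walmart"""
    domain = domain.strip().lower()
    if domain.startswith("www."):
        domain = domain[len("www."):]
    label = domain.split(".")[0]  # naive: first label only
    names = [f"www.{label}.{tld}" for tld in tlds] + [domain, label]
    return list(dict.fromkeys(names))  # de-duplicate while keeping order


def buckets_in_http_body(body: str) -> Set[str]:
    """Pull bucket names out of s3.amazonaws.com links found in a crawled page."""
    found = {m.lower() for m in VHOST_RE.findall(body)}
    found |= {m.lower() for m in PATH_RE.findall(body)}
    return found
```

Every candidate produced this way still has to be probed, since most guessed names will come back as “NoSuchBucket”.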

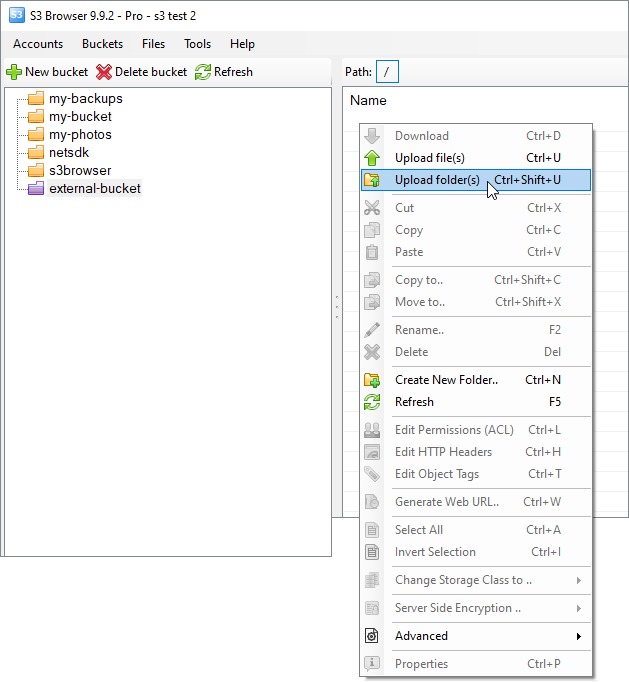

We then used custom tools to check the openness of the buckets. A file listing was pulled from all open buckets using the s3cmd tool. Finally, we analyzed the results and began to selectively download files. A majority of the available buckets were identified using Critical.IO scan data.
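The research used s3cmd to pull listings. As a standard-library alternative, here is a sketch assuming the original (version 1) ListObjects behavior: each response holds at most 1,000 keys, `IsTruncated` signals more pages, and the next page is requested with a `marker` query parameter. Without a delimiter S3 omits `NextMarker`, so the last key returned serves as the marker. The function names are hypothetical.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET
from typing import Iterator, List, Optional, Tuple

NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}


def parse_listing_page(body: bytes) -> Tuple[List[str], Optional[str]]:
    """Return (keys, next_marker); next_marker is None when the listing is complete."""
    root = ET.fromstring(body)
    keys = [el.text or "" for el in root.findall("s3:Contents/s3:Key", NS)]
    truncated = root.findtext("s3:IsTruncated", default="false", namespaces=NS) == "true"
    if not truncated or not keys:
        return keys, None
    # NextMarker only appears when a delimiter is used; otherwise resume after the last key
    next_marker = root.findtext("s3:NextMarker", default=None, namespaces=NS) or keys[-1]
    return keys, next_marker


def list_public_bucket(bucket: str, timeout: float = 10.0) -> Iterator[str]:
    """Yield every key in a publicly listable bucket, one page at a time."""
    marker: Optional[str] = None
    while True:
        url = f"https://{bucket}.s3.amazonaws.com/"
        if marker:
            url += "?" + urllib.parse.urlencode({"marker": marker})
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            keys, marker = parse_listing_page(resp.read())
        yield from keys
        if marker is None:
            return
```

Because `list_public_bucket` is a generator, its output can be streamed straight into sampling or tallying code without materializing the full listing.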

Penetration Testing Pro-Tip

Although many buckets are now private, that doesn't mean they were always private. The s3.amazonaws.com site is regularly indexed by Google (unless the bucket itself includes a robots.txt), so Google dorks still apply. For example, the following Google query can identify Excel spreadsheets containing the word 'password':

site:s3.amazonaws.com filetype:xls password

Also, the Wayback Machine is a great resource for identifying previously open buckets. Using a modified version of @mubix's Metasploit module, I also quickly identified a few hundred buckets that are currently private but previously were not.
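The Metasploit module itself is not reproduced here. As a standalone illustration, the sketch below assumes the Wayback Machine's CDX server API (`http://web.archive.org/cdx/search/cdx`) with its `url`, `matchType`, `output`, `fl`, `collapse`, and `limit` parameters, and a JSON response whose first row is a header. It builds a query for archived URLs under s3.amazonaws.com and extracts bucket names from the results; the names it finds can then be re-checked with the browser test described earlier. Function names are hypothetical.

```python
import json
import re
import urllib.parse
from typing import Set

# Wayback Machine CDX server endpoint (assumed; see the lead-in)
CDX_ENDPOINT = "http://web.archive.org/cdx/search/cdx"

_NAME = r"[a-z0-9][a-z0-9.\-]{1,61}[a-z0-9]"
# Archived URLs may carry a scheme and an explicit port, e.g. http://s3.amazonaws.com:80/bucket/
VHOST_RE = re.compile(r"^(?:https?://)?(" + _NAME + r")\.s3\.amazonaws\.com", re.IGNORECASE)
PATH_RE = re.compile(r"^(?:https?://)?s3\.amazonaws\.com(?::\d+)?/(" + _NAME + r")", re.IGNORECASE)


def cdx_query_url(host: str = "s3.amazonaws.com", limit: int = 10000) -> str:
    """Build a CDX query for archived URLs under host and all of its subdomains."""
    params = {
        "url": host,
        "matchType": "domain",  # include subdomains such as <bucket>.s3.amazonaws.com
        "output": "json",
        "fl": "original",  # return only the originally archived URL
        "collapse": "urlkey",  # one row per distinct URL
        "limit": str(limit),
    }
    return CDX_ENDPOINT + "?" + urllib.parse.urlencode(params)


def buckets_from_cdx(raw_json: str) -> Set[str]:
    """Extract bucket names from a CDX JSON response whose first row is a header."""
    rows = json.loads(raw_json) if raw_json.strip() else []
    if not rows:
        return set()
    header, *data = rows
    col = header.index("original")
    buckets: Set[str] = set()
    for row in data:
        match = VHOST_RE.match(row[col]) or PATH_RE.match(row[col])
        if match:
            buckets.add(match.group(1).lower())
    return buckets
```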

Recommendations

This is pretty straightforward: check whether you own one of the open buckets, and if so, think about what you're keeping in that bucket and whether you really want it exposed to the internet and to anyone curious enough to take a look. If you don't, remediation is quite simple, and Amazon has made it even easier by helpfully walking through the options for you. We highly recommend you get on it now!
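One way to check your own buckets is to look for ACL grants to Amazon's global groups. This sketch assumes the response shape of the S3 GetBucketAcl operation (a `Grants` list whose entries have a `Grantee` and a `Permission`, as returned for example by boto3's `get_bucket_acl`) and the documented group URIs for `AllUsers` and `AuthenticatedUsers`. `public_grants` is a hypothetical helper; it inspects ACLs only, so a bucket made public through a bucket policy would not be flagged.

```python
from typing import Any, Dict, List, Tuple

# Grantee URIs for S3's predefined global groups
PUBLIC_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers": "everyone",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers": "any AWS account",
}


def public_grants(acl: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (audience, permission) pairs for grants to the global S3 groups."""
    findings: List[Tuple[str, str]] = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        audience = PUBLIC_GROUPS.get(grantee.get("URI", ""))
        if grantee.get("Type") == "Group" and audience:
            # READ on a bucket lets the audience list its contents
            findings.append((audience, grant.get("Permission", "")))
    return findings
```

With boto3 installed and credentials configured, `public_grants(boto3.client("s3").get_bucket_acl(Bucket="example-bucket"))` would report such grants (`example-bucket` is a placeholder). Since buckets and objects have separate ACLs, the same check applies to individual files via `get_object_acl`.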

If you have any questions, post them in the comments section below and we'll do what we can to help.

We also created a Whiteboard Wednesday to provide a summary of what we found. If you're looking for an overview of this issue, the video is a good place to start:

Acknowledgements

Robin Wood - for getting the ball rolling on S3 public bucket exposure.

HD Moore - when I said “we” above, this is typically who I was talking about.

The Amazon AWS security team - these folks have been extremely responsive, warned their users about the risk, and are currently putting measures in place to proactively identify misconfigured files and buckets moving forward. If only all service providers had a team this capable.

Updates:

March 29th, 2013: We clarified that the '1 in 6' statistic applies to the buckets we tested, which, while accurate, is skewed towards the bucket names we could identify through the techniques outlined above. This ratio may not apply to all Amazon S3 buckets, but it was accurate for the buckets we identified.